Over the past few decades, online learning has evolved from the so-called 1.0 phase (in-person classes augmented by static web pages and PDFs), through the more revolutionary 2.0 stage (the dawn of online courses, classroom-blended “talking head” videos, and rudimentary analytics), to today, when the 3.0 era (high-tech, customized learning experiences) is beginning to take shape.

This year, the latest advances were once again spotlighted, explored, and celebrated at the 17th annual e-Learning 3.0 Conference. The Learning Lab’s very own IT Director, Joe Lee, was on hand at the May 14 event as one of 30 selected speakers, alongside luminaries from other regional colleges. The program also featured panel discussions with LMS vendors, researchers, and stakeholders.

Hosted by the University of the Sciences and kicked off by a keynote address from renowned edtech consultant Phil Hill, the conference showcased the use of technology to enhance teaching and learning in higher ed, allowing participants to share best practices and creative approaches for learning enrichment.

Joe’s presentation, “This is Not a Simulation: Supporting Games/Sims in the Classroom Setting,” pulled back the curtain on what goes into making effective, engaging e-learning tools in the 3.0 era. Using case studies from the Lab’s own experiences delivering and supporting some of its most popular customized learning experiences, he posed key questions that are critical to the success, or failure, of a sim or teaching game:

- How do faculty get comfortable enough to take the leap into the technological unknown?

- What problem are you trying to solve?

- How do students get help?

- Does this game/simulation achieve the professor’s goals?

- Can this be supported at scale?

Given that the Lab annually supports more than 10,000 student plays of over 33 different games for Wharton faculty in almost every discipline (and does so with a small team of fewer than 5 people), Joe offered a unique, insider perspective on what it takes to ensure that each run of a sim or classroom game goes as smoothly as possible. From preparation, evaluation and testing, to technical issues, setup, and in-class support, he shared the lessons we’ve learned and the best practices we follow (well-honed through years of trial and error).

In case you missed it, these were Joe’s key takeaways:

- Never lose sight of the learning objectives.

- There must be painful dedication to testing and retesting (and re-retesting!) of a sim or new teaching tool prior to classroom delivery – aka, the “trust but verify” approach.

- Close. The. Loop.

- Keep in mind that your e-Learning technology is but one piece of the class – so never lose sight of the big picture!

- Lastly: There is always an area where you can do better!

The Lab was proud to be part of this exciting day of collective edtech wisdom. Together with the dozens of other presenters at the forefront of the 3.0 era, it is happy to belong to an ever-growing community that is improving teaching and learning by inventing and deploying new pedagogies and technologies.

Surfing along the crest of this radical wave of new technologies is augmented reality (AR). Sometimes called “blended reality,” AR lets users experience the real world, printed text, or even a classroom lesson with an overlay of additional 3D data content. By making information instantly accessible and bringing it to life, AR opens thrilling new opportunities for experiential education.


Many students learn best when they can access visual rather than verbal information. Classroom materials already integrate visuals through presentation slides, textbooks, handouts, and the like; AR takes visuals to the next level.

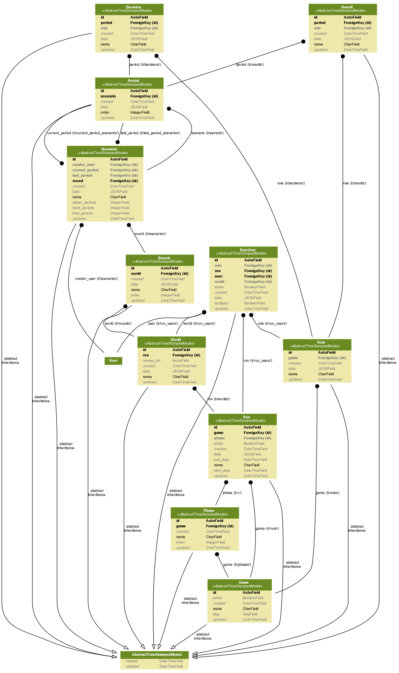

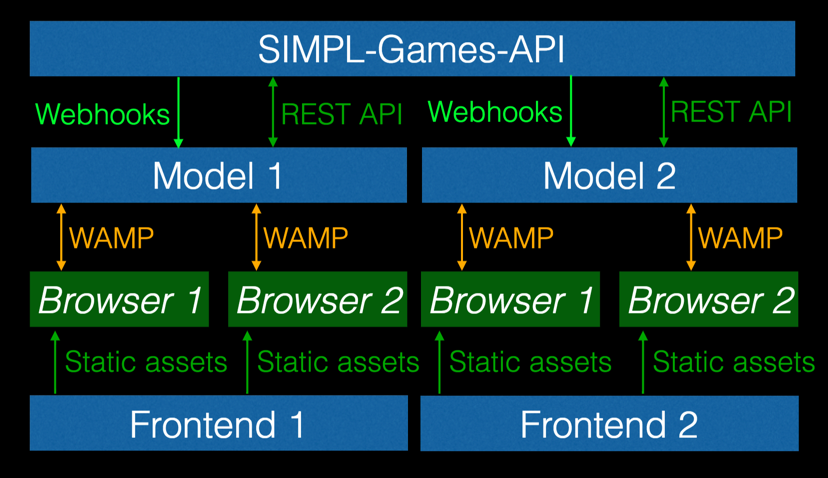

Code starts at the model level. So before we wrote a single line of SIMPL (the Learning Lab’s new simulation framework), we needed to figure out exactly what our data model would look like. The project had an ambitious goal: a simulation framework that could support all of our current games as well as games yet unknown. Given that goal, we had to be very careful to design a model flexible enough to adapt to our growing needs, but not so complex that it would make development overly challenging.

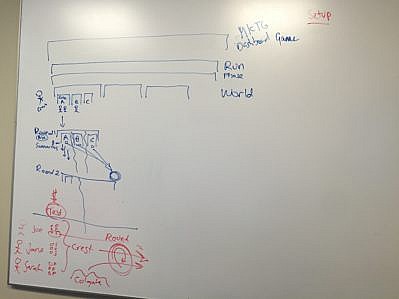


The results of one of our white-boarding sessions.
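The sketch below makes the flexibility-versus-complexity trade-off concrete by showing one possible way to layer such a data model. It is hypothetical. The class names (`Run`, `World`, `Period`, `Decision`), the `advance` helper, and the sample "supply game" are illustrative assumptions and do not reproduce SIMPL's actual schema or API. The idea is that the shared structure stays fixed for every game. Each game keeps its own fields in a free-form `data` dict and supplies its own rule for computing results.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable

# Hypothetical entities for illustration only; not SIMPL's real schema.


@dataclass
class Decision:
    """A choice submitted by one player role during a period."""
    role: str
    data: dict[str, Any]  # game-specific fields live here, not in the schema


@dataclass
class Period:
    """One round of play; holds submitted decisions and the computed result."""
    number: int
    decisions: list[Decision] = field(default_factory=list)
    result: dict[str, Any] | None = None


@dataclass
class World:
    """One independent instance of a game (e.g., a single team's market)."""
    name: str
    periods: list[Period] = field(default_factory=list)

    def current_period(self) -> Period:
        # Lazily open period 1 the first time the world is used.
        if not self.periods:
            self.periods.append(Period(number=1))
        return self.periods[-1]


@dataclass
class Run:
    """One classroom session of a game, containing one or more worlds."""
    game: str
    instructor: str
    worlds: list[World] = field(default_factory=list)


def advance(
    world: World,
    compute_result: Callable[[list[Decision]], dict[str, Any]],
) -> Period:
    """Close the current period with a game-specific rule, then open the next."""
    period = world.current_period()
    period.result = compute_result(period.decisions)
    world.periods.append(Period(number=period.number + 1))
    return period


# Usage: a made-up game whose only rule is to total the orders placed.
def total_orders(decisions: list[Decision]) -> dict[str, Any]:
    return {"total_orders": sum(d.data.get("order", 0) for d in decisions)}


run = Run(game="hypothetical-supply-game", instructor="instructor-1")
world = World(name="Team 1")
run.worlds.append(world)
world.current_period().decisions.append(Decision(role="retailer", data={"order": 4}))
world.current_period().decisions.append(Decision(role="wholesaler", data={"order": 6}))

closed = advance(world, total_orders)
print(closed.result)       # {'total_orders': 10}
print(len(world.periods))  # 2
```

Game-specific details live in the payload and the result function, so under this assumption a new game would not require changes to the shared structure. The cost is that less validation happens at the schema level.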

If you’ve ever sat through one of Wharton marketing professor Peter Fader’s highly engaging


For the past year, students taking Wharton